The AI Layer Cake

AI is all the rage these days, but how exactly does one invest in it? Here are some thoughts on what gets me excited.

Hi all,

Many people have asked me why I don’t have AI as a core investment thesis, despite having been in the space for 20 years, holding a PhD in AI applied to biology, and running an AI company that got acquired. The reason is simple: AI is not a single thing, but rather a multitude of different technologies.

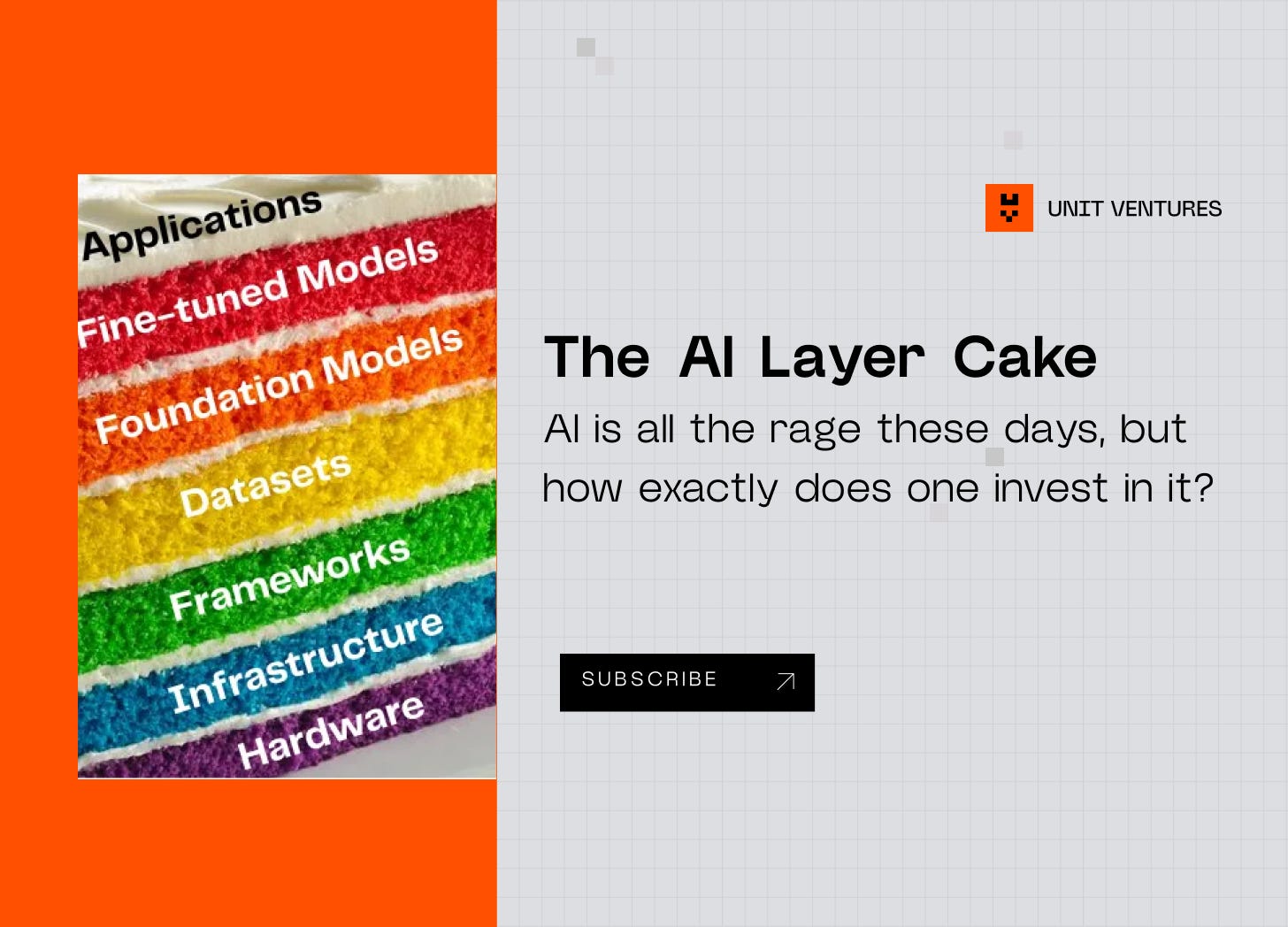

Indeed, underneath popular AI applications is a number of layers of technology, data and infrastructure that make it work. It looks something like this:

I’ll explain each layer and how I think about investing in it, starting from the top.

AI Applications

Many companies that use AI today are actually building applications on top of existing models. Just as you don’t need to build an OS or a phone to create a mobile app, you don’t need to run your own infrastructure or train your own models to create an AI app. This makes it the most accessible layer of all: anyone can now build an AI application, even if they know nothing about AI.

AI in this case is not the differentiator, but it can open up amazing opportunities. Things like AI-powered biotech or AI-augmented design are incredibly useful. While this is not usually what I invest in (I prefer to invest in the underlying tech stack), I will occasionally make an exception if the use case makes sense.

Fine-tuned Models

Most AI applications can’t use large models off the shelf; they need to fine-tune them for their specific use case. This requires some data (though not that much) to bias the model towards that use case. For example, you can use a database of legal contracts to fine-tune a language model like the one behind ChatGPT into an AI lawyer, or use a database of icons to fine-tune an image model that generates new ones on demand.

Fine-tuning itself is easy, since it’s usually offered as a service by the big AI providers. What’s harder is finding good data and a good use case. AI application companies often fine-tune their own models, but I think there is space for developer tooling here (e.g. data augmentation for fine-tuning), which is why I’m looking at this space.
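To make the data augmentation idea a bit more concrete, here is a minimal sketch in Python. It assumes fine-tuning examples are stored as prompt/completion pairs (an assumption for illustration, not any particular provider's format), and the `augment` helper is hypothetical: it creates extra training variants by randomly dropping words from the prompt, one of the simplest augmentation tricks. Real tooling would likely use richer techniques such as paraphrasing.

```python
import random

def augment(example, n_variants, drop_prob=0.1, seed=0):
    """Return the original example plus n_variants copies whose prompts have
    some words randomly dropped. Completions are left unchanged.
    Hypothetical helper for illustration only."""
    rng = random.Random(seed)  # seeded so the variants are reproducible
    words = example["prompt"].split()
    variants = [example]
    for _ in range(n_variants):
        kept = [w for w in words if rng.random() > drop_prob]
        if not kept:  # never produce an empty prompt
            kept = words
        variants.append({"prompt": " ".join(kept), "completion": example["completion"]})
    return variants

# Hypothetical example from a legal-contract fine-tuning set
example = {
    "prompt": "Summarize the termination clause of this employment contract",
    "completion": "Either party may terminate with 30 days written notice.",
}
for variant in augment(example, n_variants=3):
    print(variant["prompt"])
```

Each variant keeps the original completion, so the model sees the same answer for slightly different phrasings of the request.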

Foundation Models

Foundation models are large models trained on vast amounts of data. They serve as the basis for fine-tuning with smaller amounts of data. The idea is that since most natural structures, be it images or language, share common patterns, you can reuse the larger, bottom part of an existing model and simply retrain the top part to adjust the model to your use case. It’s like learning to write legal contracts: you wouldn’t re-learn English from scratch, you would simply learn the new concepts involved.
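Here is a toy sketch of that idea in Python, assuming a tiny made-up dataset. The `frozen_features` function is a hypothetical stand-in for the pretrained bottom layers (in a real foundation model these would be billions of learned weights), and only a small logistic-regression top part is trained. It illustrates the principle, not how large models are actually fine-tuned.

```python
import math

def frozen_features(x):
    """Hypothetical stand-in for the pretrained bottom of a model: maps raw
    input to a small feature vector. It is reused as-is and never trained."""
    return [sum(x) / len(x), max(x) - min(x)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    f = frozen_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

def train_head(data, epochs=500, lr=0.5):
    """Train only the top layer (a logistic regression) on the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            err = predict(w, b, x) - y  # gradient of the log-loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

# Tiny made-up dataset: label 1 for "high" signals, 0 for "low" ones
data = [
    ([0.9, 0.8, 1.0], 1),
    ([0.1, 0.2, 0.0], 0),
    ([0.7, 0.9, 0.8], 1),
    ([0.2, 0.1, 0.3], 0),
]
w, b = train_head(data)
print(predict(w, b, [0.95, 0.85, 0.9]))  # above 0.5
print(predict(w, b, [0.05, 0.15, 0.1]))  # below 0.5
```

Only `w` and `b` change during training; the feature extractor is shared untouched, which is what makes fine-tuning cheap compared to training from scratch.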

To understand why foundation models are so hard to build, you need to consider how AI has evolved over the last 20 years. In the early days, models were simple and data was scarce. This meant models had to incorporate handcrafted elements based on human knowledge (called features) to have any predictive power. For example, to predict the weather, you wouldn’t just feed the model raw meteorological data; you would instead pre-process this data and extract interesting patterns by hand, such as precipitation, clouds, etc. You would then feed these to a simple AI model, which would be able to make predictions. In a way, you would handcraft a foundation model yourself!
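As a rough illustration of that older approach, the sketch below turns raw weather readings into two handcrafted features and feeds them to a trivially simple linear model. The readings, feature names, weights and threshold are all hypothetical; the point is that a human decides which summaries of the data matter.

```python
# Hypothetical hourly readings for one day: (precipitation in mm, cloud cover in %)
wet_day = [(0.5, 90), (1.2, 95), (0.8, 85)]
dry_day = [(0.0, 10), (0.0, 20), (0.0, 15)]

def handcrafted_features(readings):
    """Summarize raw readings into features a human chose by hand."""
    precipitation = [r[0] for r in readings]
    clouds = [r[1] for r in readings]
    return {
        "total_precipitation": sum(precipitation),
        "mean_cloud_cover": sum(clouds) / len(clouds),
    }

def predict_rain(readings, threshold=2.0):
    """A very simple linear model on top of the features (made-up weights)."""
    f = handcrafted_features(readings)
    score = 0.5 * f["total_precipitation"] + 0.03 * f["mean_cloud_cover"]
    return score > threshold

print(predict_rain(wet_day))  # True
print(predict_rain(dry_day))  # False
```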

Over time, however, many techniques were invented to minimize the amount of manual pre-processing necessary: CNNs, LSTMs, reinforcement learning, GANs, and now transformers. What used to be a game of human design quickly became a game of data and infrastructure, as people realized that the quality of models was correlated with their size.

“Bigger is better” comes at a cost, however: you need enormous amounts of data, thousands of GPUs and tens of millions of dollars to train these mega-models, something only a handful of companies can afford.

From an investment perspective, I think it’s a waste of time to try to recreate what OpenAI, Google or Microsoft have done with language and images. However, I think there are opportunities for startups to build foundation models in less-explored areas, such as finance, climate or biology. What would a mega-transformer applied to genomics look like? Could we then use this model to design cures for rare diseases? I would be very excited to back a company creating the ChatGPT of biology!

Datasets

Building a foundation model is not just a question of algorithms and know-how. You also need vast amounts of data to train the model. Language and images are good candidates for AI because you can scrape the internet to get billions of conversations and pictures (doesn’t Bing’s uncanny personality remind you of people on anonymous forums?). For anything else, however, you’ll need to get your hands on a gigantic database somehow.

From an investment perspective, startups rarely have exclusive access to an enormous dataset, as such datasets are usually held by big companies that have been collecting them for years. Sometimes, however, startups strike a deal with those companies and build something out of it, in which case there is a potential moat that makes them investable.

As a side note, I expect big tech companies to go on a shopping spree to acquire companies owning valuable datasets that they can leverage to enter new markets such as finance or healthcare.

Frameworks

If you are going to build a model from scratch, you will need powerful tools to do so. The easier to use and the more versatile those tools are, the more productive data scientists become. This lowers the barrier to entry for training large models, shifting the focus to the data and application layers.

While many frameworks already exist (PyTorch, TensorFlow, …), there is space here for new open source frameworks for specialized use cases. We are already seeing this with the emergence of tools built specifically to train large transformer models. I am currently monitoring a number of projects to see which ones get the most developer adoption.
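One of the main things frameworks like PyTorch and TensorFlow automate is automatic differentiation: computing the gradients needed for training without the data scientist deriving them by hand. The toy `Value` class below is a hypothetical, heavily simplified sketch of reverse-mode differentiation that only supports adding and multiplying scalars; real frameworks do the same on large tensors, on GPUs.

```python
class Value:
    """A scalar that records how it was computed, so gradients can flow back."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._grad_fns = grad_fns  # one per parent: maps output grad to that parent's contribution

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other), (lambda g: g, lambda g: g))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the computation graph so each node comes after its inputs,
        # then walk it backwards applying the chain rule.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, grad_fn in zip(v._parents, v._grad_fns):
                parent.grad += grad_fn(v.grad)

x = Value(2.0)
y = Value(3.0)
f = x * y + x  # f = 8
f.backward()
print(x.grad, y.grad)  # 4.0 2.0  (df/dx = y + 1, df/dy = x)
```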

Infrastructure

Ok, so you have data, you have brilliant people, and you have your framework. Now you need to actually run stuff, which is a challenge in its own right! Coordinating thousands of GPUs while keeping costs low is very difficult, and not something most data scientists are used to doing. This is why there are specialized infrastructure providers that offer training and inference as a service, automating the entire training and inference pipelines.
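To give a flavor of what coordinating GPUs involves, here is a minimal sketch of data parallelism, one common way to split training across devices: each worker computes gradients on its own slice of the batch, the gradients are averaged (the all-reduce step), and every copy of the model takes the same update. The workers are simulated in plain Python, and the model, data and learning rate are hypothetical; real systems also have to deal with communication costs, hardware failures and models too large to fit on one GPU.

```python
def local_gradient(w, shard):
    """Gradient of the mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for the all-reduce step that averages gradients across GPUs."""
    return sum(values) / len(values)

def data_parallel_step(w, data, num_workers, lr):
    # Split the batch into equal shards, one per simulated worker
    # (assumes len(data) is divisible by num_workers).
    shard_size = len(data) // num_workers
    shards = [data[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    grads = [local_gradient(w, s) for s in shards]  # would run in parallel on real hardware
    return w - lr * all_reduce_mean(grads)

# Hypothetical data generated from y = 3x
data = [(x, 3.0 * x) for x in [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]]
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, data, num_workers=4, lr=0.01)
print(round(w, 3))  # prints 3.0
```

With equal-sized shards, the averaged gradient is exactly the full-batch gradient, so the result matches single-GPU training while the work is split four ways.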

Unsurprisingly, the biggest players here are the big cloud providers (AWS, Azure, …), but some smaller players are making a significant dent in the space, most notably Hugging Face. They executed a brilliant open source strategy and became the de facto hub where people host their experiments (and now their production models!).

I also think there might be some interesting players emerging in blockchain, as network rewards can subsidize the cost of the infrastructure, creating a competitive go-to-market vs incumbent cloud companies (Filecoin is a good example: it can offer data storage 50% cheaper than Amazon).

Hardware

The AI revolution could not have happened without GPUs. Training models is so compute-intensive that the venerable CPU simply couldn’t keep up. Following a picks-and-shovels strategy in a high-growth market can be very profitable, as witnessed by NVIDIA’s meteoric rise over the last 10 years.

But the GPU no longer seems to be enough. Making predictions using large models is so expensive that most low-margin use cases wouldn’t be able to turn a profit. This is in part why Google and big advertisers are reluctant to use them for search: it would eat their margins! The solution here is to use dedicated AI accelerators, which lower the cost of operation of large AI models.
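A back-of-the-envelope calculation shows how this plays out. All the figures below are hypothetical (they are not actual numbers for Google or any advertiser); they only illustrate how an inference cost that looks small per query can wipe out most of a thin margin, and how a cheaper accelerator could restore much of it.

```python
def margin_per_query(revenue_per_query, base_cost_per_query, ai_cost_per_query):
    """Gross margin per query, as a fraction of revenue."""
    cost = base_cost_per_query + ai_cost_per_query
    return (revenue_per_query - cost) / revenue_per_query

# All figures are hypothetical, in US cents per query
revenue = 3.0
base_cost = 1.0
print(margin_per_query(revenue, base_cost, 0.0))  # no large model
print(margin_per_query(revenue, base_cost, 1.5))  # large-model inference on every query, on GPUs
print(margin_per_query(revenue, base_cost, 0.5))  # same, on a hypothetical 3x cheaper accelerator
```

With these made-up numbers, the margin drops from about 67% to about 17% when every query hits a large model, and recovers to 50% if an accelerator cuts the inference cost threefold.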

Over the years, many companies have proposed AI accelerators, with some level of success: Google TPUs, Graphcore, Cerebras, ... Adoption was moderate, however, mostly because GPUs were good enough for most AI use cases. Things are different now, and I think we will start to see massive demand for these accelerators.

One thing is certain though: we are going through a pivotal moment in AI that will create many great opportunities, both financially and for society!

If you enjoyed this post, please consider sharing it on social media! I’m @randhindi on Twitter.
